Evidence of what? A critique of the smoke, mirrors, and squishy data of the psychotherapy industrial complex

by Blake Griffin Edwards

In recent years, calls for evidence-based practice (EBP) in the field of psychotherapy have increased, which has inevitably led to a kind of sorting: those models which have not been quantitatively validated go to the historical dust bin of shame, and those which have go into the cost-focused health insurance machinery. Everywhere we are seeing turf wars over a share of the market. In my view, much of the case for ‘evidence-based practice’ therapies is made by use of smoke, mirrors, and squishy data. Please allow me to explain.

The overvaluing of fidelity, the undervaluing of choice

Proponents of many models claiming that their therapy approach is ‘evidence-based’ do so on the basis of small, potentially faulty, and untested research trials. Further, a large number of therapists implementing EBPs may well be failing to replicate the methodologies employed in the original research studies. I do not intend this to be a wholesale critique of EBP research design nor of EBP-utilising therapists but rather a critique of the widely-held assumption that therapists trained and certified in particular EBPs are implementing in practice the methodologies of those EBPs to a level of fidelity comparable to that carried out by therapists participating in the original studies.

The reality is that if therapists implement the methodologies of an EBP but not to a satisfactory level of fidelity, their practice is not actually evidence-based at all. Yet very broad allowances are being made in the coding of EBPs within managed care here in the USA to satisfy increasingly strict regulatory requirements for levels of EBP implementation. The result, I fear, is a net reduction in the depth and quality of psychotherapy practice rather than an increase in fidelity to effective psychotherapy intervention.

A common critique by EBP sceptics in light of researchers’ claims of tightly controlled studies goes, ‘If your effect is so fragile that it can only be reproduced under strictly controlled conditions, then why do you think it can be reproduced consistently by practitioners operating without such active monitoring or controls?’ If fidelity to a manualised modality cannot be ensured beyond the randomised controlled trials that stamped it ‘evidence-based’, how do we know, in the marketplace, that it is so?

Research findings based on the application of treatment manuals have led to the endorsement of treatment brands on the assumption that these are practised in a manner consistent with the research treatment manuals. Very often, they are not. In effect, the endorsement of a brand name treatment is a shortcut to, and a means of, defining de facto clinical practice guidelines and gaining a market monopoly. But far more importantly, many EBPs rigidly structure for therapists and, thereby, for clients, systems of levers to pull, should the client’s esteem tip this way or should the client’s fears tip that way. In my experience, evidence-based practice cadres often do not have an interest in the personal agency of the client: in their capacity to choose for themselves and in the innate strengths and resilience that can emerge given the right kind of supportive conditions. While the spirit and principled mindset of a field of evidence-based practice is appropriately postured to mitigate potentially negligent and dangerous practices, far more often than is widely acknowledged, EBP implementation takes the form of naive acceptance of poorly tested interventions and, in effect, may or may not ultimately ensure better therapy.

Donald Berwick, a Harvard-based quality-improvement expert, himself noted for employing evidence-based methods in the field of medicine, wrote in 2005 that we had “overshot the mark” and turned evidence-based practice into an “intellectual hegemony that can cost us dearly if we do not take stock and modify it” (315). In 2009, advocating for ‘patient-centred care’, he declared, “evidence-based medicine sometimes must take a back seat” (561). His sentiment applies to the field of psychotherapy as well.

The American Psychological Association unveiled a policy in 2005 recognising that to practise from an evidence base, findings based on research are insufficient. The policy characterises evidence-based psychological practice (EBPP) as incorporating evidence about treatment alongside expert opinion and an appreciation of client characteristics. Three components (evidence for treatment, expert opinion, and patient characteristics) are essential to writing clinical practice guidelines and thereby enhancing the delivery of evidence-based treatments (APA Task Force on Evidence-Based Practice, 2006).

The APA policy stated, “A central goal of EBPP is to maximize patient choice among effective alternative interventions” (284). These days, many practices claiming to work from a so-called ‘evidence base’ in practical fact minimise client choice.

The baby and the bath water

There is no wholesale dismissal of evidence here, only of the errors of blind acceptance of a widely criticised and underperforming field of EBP research that has oversold to the unscientific public the merits of many findings. The reality is that subjective aspects within and incentives related to psychotherapy outcome research leave the field vulnerable to corruption in research data that may be construed as ‘evidence’. We, as practitioners and consumers, should be asking ‘Evidence of what?’

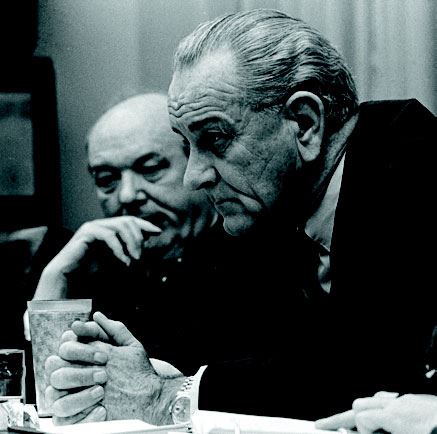

Robert McNamara was the U.S. Secretary of Defence from 1961 to 1968, during the Vietnam War. McNamara saw the world in numbers. He spearheaded a paradigm shift in strategy at the U.S. Defence Department to implement large-scale metric tracking and reporting that he contended would help minimise individual bias among department experts. A core metric he used to inform strategy and evaluate progress was body count data. ‘Things you can count, you ought to count,’ argued McNamara.

His focus, however, created a problem: many important variables could not be counted, so he largely ignored them. This thinking led to wrongheaded decisions by the U.S. and resulted in an eventual need for withdrawal from the Vietnam conflict. Social scientist Daniel Yankelovich (1972) coined the term ‘McNamara fallacy’, pointing out a human tendency to undervalue what cannot be measured and warning of the dangers of taking the measurably quantitative out of the complexity of its qualitative context:

The first step is to measure whatever can be easily measured. This is OK as far as it goes. The second step is to disregard that which can’t be easily measured or to give it an arbitrary quantitative value. This is artificial and misleading. The third step is to presume that what can’t be measured easily really isn’t important. This is blindness. The fourth step is to say that what can’t be easily measured really doesn’t exist. This is suicide.

(Yankelovich, 1972: 72)

Sociological researcher William Bruce Cameron put it another way, “Not everything that counts can be counted, and not everything that can be counted counts” (1963: 13).

I hope the reader will simply take for granted that I do not intend to throw the baby out with the bath water, and that there are, indeed, many good reasons our field should be expanding research in and implementation of evidence-based practices. I assume, however, that on the basis of what I have written thus far, many readers are at risk of concluding that I am simply ignoring the case for evidence-based practice and EBP research. Let me erase that assumption. The continued development of niche cadres of evidence-based practices, within proper bounds and with proper accountability, has great promise. In short, I see three primary benefits of EBP:

- Research-backed therapy interventions operationalised in manuals and delivered by trained therapists offer significantly increased efficacy in treating certain disorders (Wampold, 2001; Roth & Fonagy, 1997; Nathan & Gorman, 1998).

- Consistency in treatment intervention can reduce therapist variability, which will likely increase efficacy in treating certain disorders (Luborsky & Barber, 1993).

- When we emphasise the need for evidence-based skill sets, we elevate in value and priority the significance of ensuring effective therapeutic treatment with clients, including our knowledge about what works and with whom (Norcross, 2002).

To be clear, and lest I gain a reputation only for what I am against: I support online toolkits that integrate and track data collection as part of EBP research and case consultation; I support systems of care that promote and validate the complementary paradigm of ‘practice-based evidence’, providing a means for therapists to generate support for what works for clients based on professional experience; I support grant funding for promising practices; I support serious implementation of confidential and peer-reviewed feedback systems; I support specialised practice cadres which promote niche clinical skills and clinical integrity with the intention of promoting positive therapeutic outcomes; and I support epistemological pluralism, in contrast to a reductionism that places value on only certain aspects of therapeutic outcome.

Healing rhetoric

There is often a contradiction between so-called objective clinical measurement and the subjective experience of the person before us. I must cultivate therapeutic space to come to know the whole person, yet this raises the question of what this ‘knowing the whole person’ entails.

Scientists who study the oceans know some things about waves, but they can hardly predict the behaviour of a particular wave, when it will come or when it will go. Still, they know a great deal about the tides, about the forces that influence and participate in the larger oceanic ecology. Larger scientific principles and findings might, then, provide opportunity to deduce how best to understand and work in relationship with particular waves or—allow me this stretch—families of waves. On the other hand, inductively, we can study a particular wave and hypothesise about the nature of waves.

We are limited in our knowing, but that does not negate knowing. We are limited in our influence, but that does not negate our influencing. Yet to know and to do wisely is to see a larger web of value and life. Life is far more complex than our theories and our models give it credit for. And sometimes it is far simpler. Your average surfer would be reluctant to speak of oceanic ecology or very much about tides, yet a good surfer knows a great deal about waves. It would not be uncommon for a passionate surfer to go on and on about a particular wave with great nuance. As meaning and being underlie thinking and behaving, I must join clients in the small and cramping space where they are trapped. We must learn to be persons with other people, and this often involves being playful and creative, not purely rational.

We are asked in the helping professions to engage in services guided by sound clinical reasoning and, increasingly, by ‘evidence’, which really means that helpers are widely expected to be guided by some pure, logical psychotherapeutic source, be that by deductive hypothesising from validated clinical measures or by inductive reasoning from evidence-based research. Yet the behavioural sciences do not deal in anything like the kinds of absolutes that mathematicians and physicists do, who study domains in which dynamics of cause and effect are more realistically isolated into forces and impacts. The method with which so much of science calculates and intervenes is a reasoning predominantly of the quantitative sort; yet we who study the ultra-complex, qualitative multiverse of mind and behaviour continue to be pressed to demonstrate empirical bases for our actions. For us, no pure reason exists.

Many evidence-based practice models are designed with unrealistic controls. Some rely on diagnostic controls in which therapists are to follow certain intervention protocols on the basis of a client’s particular diagnostic formulation. Yet what controls exist to ensure diagnostic precision? Over my years of practice, I have witnessed countless cases in which clients have been assigned disparate diagnoses across systems of care, in which psychotherapists, clinical psychologists, neuropsychologists, psychiatric providers, and primary care providers have committed to incompatible diagnostic conclusions, and in which, time and again, proactive and conscientious cross-silo, interdisciplinary case collaboration has proven unable to remedy the disagreement. You can imagine the consequences for treatment delivered under the guidance of a manual reliant on a firm diagnostic bearing.

Faith, hope, relationship and an unfathomable number of other factors impossible to quantify or procedurise, many external to the therapeutic enterprise, may catalyse transformation. We should be cautious of increasing demands for ‘evidence’, remain wary of evidence-based claims, and grapple with the unassailable reality that there is a vast gulf between the diagnosable problems as seen through the lens of clinical expertise and the essence and worth, strengths and hopes of the person before me.

We must lean back into the sort of ‘healing rhetoric’, the study of meanings and the art of persuasion, urged by Jerome Frank (1961) in Persuasion and healing, in which therapeutic alliance is part and parcel of the skill of intervention, and in which intervention feeds the alliance in healing, human ways. If a psychotherapist is lifeless or if his technique is too technical, his efforts to help may be worthless. Therapy, in this case, is not relationship but a poor excuse for scientific experimentation.

Yet we are all too willing to please and play the game with endless attempts to garner incontrovertible, scientific justifications for our very necessarily intuitive psychotherapeutic inclinations or even to impose onto our boundless sensibilities rigid conceptualisations and applications which fail to fathom the enigmas of the particular ecologies of the persons with, not on, whom we work. We can do better.

Blake Griffin Edwards is a licensed marriage and family therapist, behavioral health executive at Columbia Valley Community Health, and governing board chair for the North Central Accountable Community of Health. Blake has authored many publications on psychotherapy and authors a blog for Psychology Today called “Progress Notes.” Blake lives with his wife and twin daughters in north central Washington State, USA.

This article is an adapted excerpt from a chapter titled, ‘The empathor’s new clothes: When person-centred practices and evidence-based claims collide’ from the book Re-visioning person-centred therapy: Theory and practice of a radical paradigm (Routledge, 2018). Permission granted to reprint.

References

APA Task Force on Evidence-Based Practice. (2006). Evidence-based practice in psychology. American Psychologist, 61, 271–285. DOI: 10.1037/0003-066X.61.4.271.

Berwick, D. M. (2005). Broadening the view of evidence-based medicine. Quality and Safety in Health Care, 14, 315–316.

Berwick, D. M. (2009). What ‘patient-centered’ should mean: Confessions of an extremist. Health Affairs, 28(4), 555–565.

Cameron, W. B. (1963). Informal sociology: A casual introduction to sociological thinking. Random House.

Frank, J. D. (1961). Persuasion and healing. The Johns Hopkins Press.

Luborsky, L., & Barber, J. P. (1993). Benefits of adherence to treatment manuals, and where to get them. In N. Miller, L. Luborsky, J. P. Barber, & J. P. Docherty (Eds.), Psychodynamic treatment research: A handbook for clinical practice (pp. 211–226). Basic Books.

Norcross, J. C. (2002). Psychotherapy relationships that work: Therapist contributions and responsiveness to patients. Oxford University Press.

Roth, A., & Fonagy, P. (1997). What works for whom? A critical review of psychotherapy research. Guilford Press.

Yankelovich, D. (1972). Corporate priorities: A continuing study of the new demands on business. D. Yankelovich Inc.